- Created by Michael Kosinski on Jul 08, 2024


General Information

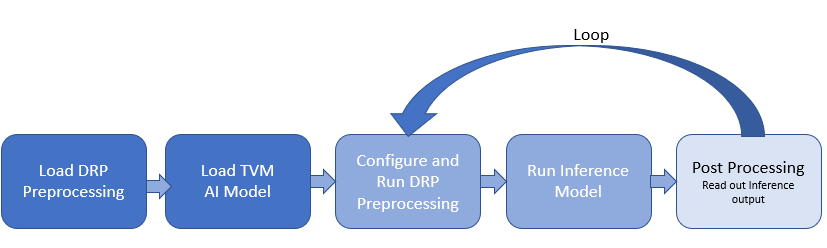

This is a summary of how to run a TVM model on the RZV board. The code snippets are based on the TVM Tutorial Application that can be found here. The TVM Runtime Inference API is part of the EdgeCortix MERA software, and its API is defined in the header file MeraDrpRuntimeWrapper.h. The DRP-AI pre-processing is described in the appendix of the TVM Application document here.

TVM applications must be deployed on the board with the following directory layout.

/
├── usr
│   └── lib64
│       └── libtvm_runtime.so
└── home
    └── root
        └── tvm
            ├── preprocess_tvm_v2ma
            │   ├── drp_param.bin
            │   ├── ...
            │   └── preprocess_tvm_v2ma_weight.dat
            ├── resnet18_onnx
            │   ├── deploy.json
            │   ├── deploy.params
            │   └── deploy.so
            ├── sample.yuv
            ├── synset_words_imagenet.txt
            └── tutorial_app

- libtvm_runtime.so
  - This is the TVM Runtime Library.
  - It is required to run any TVM AI model.
  - This file is located in ${TVM_ROOT}/obj/build_runtime (RZV2L, RZV2M, RZV2MA).
- preprocess_tvm_v2xx
  - These are the pre-compiled DRP pre-processing files. They are required to run the pre-processing code.
  - The name of the directory depends on the RZV MPU used (_v2xx = l, m, or ma).
  - These directories are located in ${TVM_ROOT}/apps/exe.
  - Only the directory for your MPU needs to be copied (the example above copies only the v2ma directory).
- xxxxxxx_onnx directory
  - This is the location of the output files of the TVM Translator.
  - The name of the directory
- Application
- Optional files: these files depend on the model used.
  - sample.yuv
    - This is the input YUV image used for the TVM tutorial application demo.
  - synset_words_imagenet.txt
    - This text file lists the names of the ResNet classifications. It is used in post-processing to display the class name.
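Before starting the application, it can help to confirm that the required files are where the runtime expects them. The following is a minimal C++17 sketch using std::filesystem; the relative paths mirror the RZ/V2MA + ResNet18 example layout above, and the helper name `MissingDeploymentFiles` is hypothetical, not part of the MERA or TVM API.

```cpp
#include <cassert>
#include <filesystem>
#include <string>
#include <system_error>
#include <vector>

namespace fs = std::filesystem;

// Hypothetical helper: returns every required deployment path that does not
// exist under `root`. The list follows the RZ/V2MA example layout; change the
// pre-processing and model directory names for your MPU and model.
std::vector<std::string> MissingDeploymentFiles(const fs::path& root) {
    const std::vector<fs::path> required = {
        "usr/lib64/libtvm_runtime.so",
        "home/root/tvm/preprocess_tvm_v2ma/drp_param.bin",
        "home/root/tvm/preprocess_tvm_v2ma/preprocess_tvm_v2ma_weight.dat",
        "home/root/tvm/resnet18_onnx/deploy.json",
        "home/root/tvm/resnet18_onnx/deploy.params",
        "home/root/tvm/resnet18_onnx/deploy.so",
    };
    std::vector<std::string> missing;
    for (const fs::path& rel : required) {
        const fs::path full = root / rel;
        std::error_code ec;  // non-throwing overload: an OS error counts as "missing"
        if (!fs::exists(full, ec)) {
            missing.push_back(full.string());
        }
    }
    return missing;
}
```

On the board, `MissingDeploymentFiles("/")` returning an empty vector means every listed file is present. The optional files (sample.yuv, synset_words_imagenet.txt) are deliberately not checked.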

Initialization

Step 1) Load the pre-processing object directory pre_dir to the DRP-AI. pre_dir contains the Renesas pre-compiled files located in ${TVM_ROOT}/apps/exe/preprocess_tvm_<v2xx>, with one directory per MPU (v2l, v2m, v2ma):

- preprocess_tvm_v2l

- preprocess_tvm_v2m

- preprocess_tvm_v2ma

preruntime.Load(pre_dir);
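Because only the directory matching your MPU is deployed, an application that targets several boards could pick pre_dir at startup. This is a minimal sketch; the enum `RzvMpu` and both functions are hypothetical helpers that only encode the preprocess_tvm_<v2xx> naming convention described above.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

// Hypothetical enum for the supported RZ/V MPUs; not part of the MERA API.
enum class RzvMpu { V2L, V2M, V2MA };

// Maps an MPU to its pre-processing directory name (preprocess_tvm_<v2xx>).
std::string PreprocessDirName(RzvMpu mpu) {
    switch (mpu) {
        case RzvMpu::V2L:  return "preprocess_tvm_v2l";
        case RzvMpu::V2M:  return "preprocess_tvm_v2m";
        case RzvMpu::V2MA: return "preprocess_tvm_v2ma";
    }
    throw std::invalid_argument("unknown RZ/V MPU");
}

// Builds pre_dir relative to the application directory, e.g. /home/root/tvm.
std::string PreprocessDirPath(const std::string& app_dir, RzvMpu mpu) {
    return app_dir + "/" + PreprocessDirName(mpu);
}
```

Assuming `preruntime.Load()` accepts the directory path as a `std::string`, the result of `PreprocessDirPath("/home/root/tvm", RzvMpu::V2MA)` could be passed as pre_dir.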

Step 2) Load the TVM-translated inference model and its weights from model_dir into the runtime object.

runtime.LoadModel(model_dir);

Step 3) Allocate contiguous memory for the camera capture buffer. The pre-processing runtime requires its input buffer to be allocated in a contiguous memory area. This application uses the imagebuf (u-dma-buf) contiguous memory area. For details about the Linux contiguous memory area, refer to the page here.
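In the pre-processing snippet, in_param.pre_in_addr is set to udmabuf_addr_start, the start of this contiguous buffer. Assuming that value is the buffer's physical address as reported by the u-dma-buf driver, it can be read from sysfs. The sketch below assumes an attribute path of the form /sys/class/u-dma-buf/<device-name>/phys_addr holding a hexadecimal value; the device name depends on your board configuration, and `ReadHexAttribute` is a hypothetical helper.

```cpp
#include <cassert>
#include <cstdint>
#include <fstream>
#include <stdexcept>
#include <string>

// Reads a hexadecimal sysfs attribute such as
// /sys/class/u-dma-buf/<device-name>/phys_addr (device name is board-specific).
// std::stoull with base 16 accepts an optional "0x" prefix.
uint64_t ReadHexAttribute(const std::string& path) {
    std::ifstream ifs(path);
    if (!ifs) {
        throw std::runtime_error("cannot open " + path);
    }
    std::string text;
    ifs >> text;  // the attribute is a single token, e.g. "0x0000000070000000"
    return std::stoull(text, nullptr, 16);  // throws if the text is not hex
}
```

The returned value would then be cast to whatever integer type pre_in_addr uses in preproc_param_t.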

Run TVM Inference

This section describes the steps to run the TVM AI model. It is recommended that this section and the post-processing section run in their own thread. In addition, camera capture should run in a separate thread.
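One common way to connect a capture thread to an inference thread is a single-slot "latest frame" mailbox: the camera thread overwrites the slot, and the inference thread always takes the newest frame instead of working through a backlog. This is a minimal sketch of that pattern with the standard library; `LatestFrame` is a hypothetical class, and `Frame` is a plain byte vector for illustration (a real application working from the contiguous buffer might pass a buffer index instead).

```cpp
#include <cassert>
#include <condition_variable>
#include <cstdint>
#include <mutex>
#include <optional>
#include <utility>
#include <vector>

// Single-slot mailbox shared by a capture thread (producer) and an
// inference thread (consumer). A newer frame replaces an unread one.
class LatestFrame {
public:
    using Frame = std::vector<uint8_t>;

    // Called by the capture thread after each camera read.
    void Put(Frame frame) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            frame_ = std::move(frame);
        }
        cv_.notify_one();
    }

    // Called by the inference thread; blocks until a frame is available or
    // Close() has been called and no frame is left (then returns nullopt).
    std::optional<Frame> Take() {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [this] { return frame_.has_value() || closed_; });
        if (!frame_) {
            return std::nullopt;
        }
        std::optional<Frame> out = std::move(frame_);
        frame_.reset();
        return out;
    }

    // Wakes the consumer so the inference thread can exit cleanly.
    void Close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        cv_.notify_all();
    }

private:
    std::mutex mutex_;
    std::condition_variable cv_;
    std::optional<Frame> frame_;
    bool closed_ = false;
};
```

A capture std::thread would loop on camera reads and call `Put`, while the inference std::thread loops on `Take` and then runs the pre-processing, inference, and post-processing steps on each frame.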

Step 1) Read the camera image. Refer to the TVM code example.

Step 2) Set the pre-processing parameters and run the pre-processing model. The DRP pre-processing operations are format conversion (YUV422 to RGB), resize, and input normalization.

preproc_param_t in_param;
in_param.pre_in_addr = udmabuf_addr_start;
in_param.pre_in_shape_w = INPUT_IMAGE_W;
in_param.pre_in_shape_h = INPUT_IMAGE_H;
in_param.pre_in_format = INPUT_YUYV;
in_param.resize_w = MODEL_IN_W;
in_param.resize_h = MODEL_IN_H;
in_param.resize_alg = ALG_BILINEAR;
/* Compute the normalization coefficients cof_add/cof_mul for DRP-AI from mean/std */
in_param.cof_add[0] = -255*mean[0];
in_param.cof_add[1] = -255*mean[1];
in_param.cof_add[2] = -255*mean[2];
in_param.cof_mul[0] = 1/(std[0]*255);
in_param.cof_mul[1] = 1/(std[1]*255);
in_param.cof_mul[2] = 1/(std[2]*255);
ret = preruntime.Pre(&in_param, &output_ptr, &out_size);
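The coefficients above fold the usual "scale to [0, 1], subtract mean, divide by std" normalization into one add and one multiply per channel. The sketch below recomputes them and provides a CPU reference for checking, assuming the DRP-AI applies `(pixel + cof_add) * cof_mul`, which is the form these coefficients imply: `(x - 255*mean) / (255*std) = (x/255 - mean) / std`. The struct and function names are hypothetical, and the mean/std values in the usage example are the commonly used ImageNet values; use the values your model was trained with.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Per-channel coefficients in the form used by cof_add / cof_mul.
struct NormCoefficients {
    std::array<float, 3> add;
    std::array<float, 3> mul;
};

// Same formulas as the snippet: cof_add = -255*mean, cof_mul = 1/(255*std).
NormCoefficients ComputeCoefficients(const std::array<float, 3>& mean,
                                     const std::array<float, 3>& stdev) {
    NormCoefficients c{};
    for (int i = 0; i < 3; ++i) {
        c.add[i] = -255.0f * mean[i];
        c.mul[i] = 1.0f / (stdev[i] * 255.0f);
    }
    return c;
}

// CPU reference for one 8-bit pixel value on one channel, assuming the
// (pixel + add) * mul form. Equals (pixel / 255 - mean) / std.
float NormalizeReference(float pixel, const NormCoefficients& c, int channel) {
    return (pixel + c.add[channel]) * c.mul[channel];
}
```

For example, `ComputeCoefficients({0.485f, 0.456f, 0.406f}, {0.229f, 0.224f, 0.225f})` gives cof_add[0] = -123.675 and cof_mul[0] = 1/58.395.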

Step 3) Set the pre-processing output as the inference input.

runtime.SetInput(0, output_ptr);

Step 4) Run inference. This is a synchronous function call that waits for the TVM runtime to complete.

runtime.Run();
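Because Run() blocks until inference completes, wrapping the call in a wall-clock timer gives the inference latency directly. This is a minimal sketch using std::chrono; `ElapsedMs` is a hypothetical helper, not part of the MERA API.

```cpp
#include <cassert>
#include <chrono>
#include <utility>

// Runs `fn` once and returns the elapsed wall-clock time in milliseconds.
// steady_clock is used because it is not affected by system clock changes.
template <typename Fn>
double ElapsedMs(Fn&& fn) {
    const auto start = std::chrono::steady_clock::now();
    std::forward<Fn>(fn)();
    const auto end = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(end - start).count();
}
```

In the application this would be used as `double ms = ElapsedMs([&] { runtime.Run(); });`.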

Post-processing

Because TVM offloads some operations to the CPU, the **final** output data type can be 32-bit floating point (FP32) or 16-bit floating point (FP16). The output is FP32 when the final operation runs on the CPU, and FP16 when it runs on the DRP.

Step 1) Read the output data from DRP memory into an array as follows:

/* Comparing output with reference. */
/* output_buffer below is a tuple: { data type, address of output data, number of elements } */
auto output_buffer = runtime.GetOutput(0);
int64_t out_size = std::get<2>(output_buffer);
/* Array to store the FP32 output data from inference.
   std::vector avoids a non-standard, stack-allocated variable-length array. */
std::vector<float> floatarr(out_size);
/* Case 1: output datatype is FP16 */
if (InOutDataType::FLOAT16 == std::get<0>(output_buffer)) {
    /* Read the output data and convert each element to FP32 into floatarr */
}
/* Case 2: output datatype is FP32 */
if (InOutDataType::FLOAT32 == std::get<0>(output_buffer)) {
    /* Copy the output data directly into floatarr */
}
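The FP16 branch needs an FP16-to-FP32 conversion. This is a minimal sketch of the standard IEEE 754 binary16-to-binary32 conversion, handling zeros, subnormals, infinities, and NaN. `HalfToFloat` and `HalfBufferToFloat` are hypothetical helpers, and the sketch assumes the FP16 output address from `std::get<1>(output_buffer)` can be viewed as `const uint16_t*` holding raw half-precision bit patterns.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// Converts one IEEE 754 binary16 (FP16) bit pattern to binary32 (FP32).
float HalfToFloat(uint16_t h) {
    const uint32_t sign = (h >> 15) & 0x1u;
    const uint32_t exp = (h >> 10) & 0x1Fu;
    const uint32_t mant = h & 0x3FFu;
    float value;
    if (exp == 0) {
        // Zero or subnormal: mant * 2^-24
        value = std::ldexp(static_cast<float>(mant), -24);
    } else if (exp == 0x1F) {
        // All-ones exponent: infinity if mantissa is zero, otherwise NaN
        value = (mant == 0) ? std::numeric_limits<float>::infinity()
                            : std::numeric_limits<float>::quiet_NaN();
    } else {
        // Normal: (1 + mant/1024) * 2^(exp-15) == (1024 + mant) * 2^(exp-25)
        value = std::ldexp(static_cast<float>(mant | 0x400u),
                           static_cast<int>(exp) - 25);
    }
    return sign ? -value : value;
}

// Converts an FP16 output buffer of n elements into an FP32 vector.
std::vector<float> HalfBufferToFloat(const uint16_t* data, std::size_t n) {
    std::vector<float> out(n);
    for (std::size_t i = 0; i < n; ++i) {
        out[i] = HalfToFloat(data[i]);
    }
    return out;
}
```

Under that assumption, the FP16 branch could fill floatarr with `HalfBufferToFloat(static_cast<const uint16_t*>(ptr), out_size)`, where `ptr` stands for the output address.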

Step 2) Parse the output for classification and/or bounding boxes. The output format depends on the AI model used. The TVM demo **tutorial_app** demonstrates the ResNet model, a classification model whose output is an array of 1000 class scores. Find the highest confidence value in the array, then check whether that maximum is above a defined threshold.
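The classification step can be sketched as an argmax followed by a threshold check, with labels loaded one per line from synset_words_imagenet.txt. All names below are hypothetical. The optional softmax is an addition, not something this page states tutorial_app does: it converts raw logits into probabilities so that a threshold in [0, 1] is meaningful, and is only needed if your model outputs logits.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <fstream>
#include <string>
#include <vector>

struct TopResult {
    std::size_t index;  // class index into the output array
    float score;        // value at that index
};

// Returns the index and value of the largest element.
TopResult ArgMax(const std::vector<float>& scores) {
    assert(!scores.empty());
    const auto it = std::max_element(scores.begin(), scores.end());
    return {static_cast<std::size_t>(it - scores.begin()), *it};
}

// Optional: converts raw logits to probabilities. Subtracting the maximum
// before std::exp avoids overflow without changing the result.
std::vector<float> Softmax(const std::vector<float>& logits) {
    assert(!logits.empty());
    const float max_logit = *std::max_element(logits.begin(), logits.end());
    std::vector<float> probs(logits.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < logits.size(); ++i) {
        probs[i] = std::exp(logits[i] - max_logit);
        sum += probs[i];
    }
    for (float& p : probs) {
        p /= sum;
    }
    return probs;
}

// Loads one label per line, e.g. from synset_words_imagenet.txt.
std::vector<std::string> LoadLabels(const std::string& path) {
    std::vector<std::string> labels;
    std::ifstream ifs(path);
    for (std::string line; std::getline(ifs, line);) {
        labels.push_back(line);
    }
    return labels;
}
```

With floatarr copied into a `std::vector<float>`, a result would be reported only when `ArgMax(scores).score` meets your chosen threshold, printing `labels[index]` as the class name.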
